AI’s Ethical Dilemma: Can We Trust Machines to Make Moral Decisions?

Artificial Intelligence (AI) has evolved rapidly over the years, automating tedious tasks, revolutionizing industries like healthcare and finance, and changing our daily routines. Given its efficiency, speed, and ability to process information beyond human limits, a question arises:

Can AI be trusted to make unsupervised moral decisions?

How AI Makes Decisions

AI makes decisions by analyzing large amounts of data through pattern recognition, statistical analysis, and mathematical optimization.

- Predictive Analysis: AI uses Machine Learning (ML) algorithms to analyze data and learn patterns, which it then applies to new, unseen data to predict future trends, for example in sales forecasting and demand planning. These insights help businesses like Amazon manage inventory better, improve product handling, and keep enough stock to meet customer demand.

- Natural Language Processing: NLP is a subfield of AI that allows machines to comprehend, interpret, and generate human language. Applications include sentiment analysis (identifying the tone embedded in documents), summarization (condensing lengthy documents into their key points), customer feedback analysis, and answering users’ queries.

- Fraud Detection and Prevention: AI acts as a pattern-recognition system, analyzing large volumes of transaction data and user activity to identify suspicious behavior and potentially fraudulent activity. This helps secure payments and prevent theft and phishing scams.
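As a rough illustration of the pattern-recognition idea behind fraud detection, the sketch below flags new transactions whose amounts deviate sharply from a user’s past spending, using a simple z-score. The function name `flag_suspicious`, the sample amounts, and the cutoff of 3 standard deviations are all illustrative assumptions; real fraud systems combine many more signals and usually rely on learned models.

```python
from statistics import mean, stdev


def flag_suspicious(history: list[float], new_amounts: list[float],
                    threshold: float = 3.0) -> list[float]:
    """Return the new transaction amounts that look anomalous.

    An amount is flagged when its distance from the user's average
    spending exceeds `threshold` sample standard deviations
    (a hypothetical cutoff chosen for illustration).
    """
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation; needs at least 2 values
    if sigma == 0:
        # Every past transaction was identical, so any different amount is unusual.
        return [a for a in new_amounts if a != mu]
    return [a for a in new_amounts if abs(a - mu) / sigma > threshold]


past = [42.0, 38.5, 51.0, 45.2, 40.0, 47.8, 39.9, 44.1]
incoming = [43.0, 49.5, 980.0]
print(flag_suspicious(past, incoming))  # prints [980.0]
```

A flagged amount is only a signal for closer review, not proof of fraud: an unusually large but legitimate purchase would be flagged too.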

However, problems arise when AI systems are influenced by biased data. If the data used to train an AI is incomplete or biased, its decisions will reflect those same biases. For example, some facial recognition systems have been found to struggle to recognize people with darker skin tones because their training data consisted predominantly of lighter-skinned individuals. Such errors can have serious consequences, like false arrests and wrongful deportations.

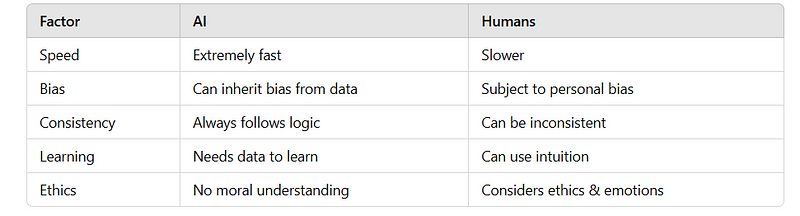

AI vs Human Decision-Making

The Challenge of Teaching Morality to Machines: Real-World Examples

At the heart of this issue lies the fundamental difference between data-driven decisions and moral judgments. Human morality is shaped by culture, upbringing, and emotions, whereas AI relies on data and pre-programmed guidelines and has no emotions of its own. Instilling morality in a machine is a particularly daunting task because ethical decisions rarely fall into clear-cut categories.

The Trolley Problem

In an unavoidable road accident, a human driver reacts largely on instinct, but a self-driving car can only follow its programming, which may have to decide in advance whether to save the passenger or the pedestrians. This dilemma is a modern variant of the Trolley Problem, a thought experiment in which a person must choose between two harmful outcomes. It raises uncomfortable questions about how AI should be programmed to handle life-and-death situations. Should AI always prioritize the greater good, or should it protect its owner above all? Companies like Tesla and Mercedes-Benz may have to program their vehicles to handle such real-life situations, yet the question remains unanswered: who sets the moral guidelines for AI? And once those guidelines are established, who is accountable if something goes wrong?

AI in Hiring Decisions: Fair or Flawed?

Because AI is expected to make hiring more efficient and fair than human screening, companies use AI-driven recruitment tools to screen resumes, conduct interviews, and make hiring decisions. Some cases, however, show that this assumption does not always hold, because AI learns from historical data that may reflect past biases. For example, Amazon built an experimental hiring tool that was supposed to rank job candidates, identifying the strongest applicants and discarding the rest. The system was trained on a decade’s worth of resumes. Because those resumes came mostly from men, reflecting male dominance in the industry, the AI favored candidates who used verbs like “executed” and “captured,” which appeared more often on male engineers’ resumes. It also ranked resumes lower if they included the word “women’s,” as in “women’s chess club captain,” and downgraded graduates of two all-women’s colleges. Amazon eventually scrapped the tool because of its gender bias.
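To make this mechanism concrete, here is a deliberately tiny toy model. It is not Amazon’s system, and its training data, vocabulary, and scoring rule are all hypothetical. The model weights each word by how much more or less often resumes containing it were hired in the past, then scores a new resume by summing those weights. Because the invented history contains no hires with “women’s” on the resume, the model learns to penalize that word, even though the word says nothing about ability.

```python
from collections import defaultdict

# Hypothetical historical data: (words on resume, was the candidate hired?).
# The skew is deliberate: it mimics a past in which most hires were men.
history = [
    ({"executed", "python", "captured"}, True),
    ({"executed", "java"}, True),
    ({"captured", "python"}, True),
    ({"python", "women's", "chess"}, False),
    ({"java", "women's"}, False),
    ({"python", "led"}, False),
]


def learn_word_weights(data):
    """Weight = (hire rate among resumes containing the word) - (overall hire rate)."""
    overall = sum(hired for _, hired in data) / len(data)
    seen = defaultdict(int)
    hires = defaultdict(int)
    for words, hired in data:
        for w in words:
            seen[w] += 1
            hires[w] += hired  # True counts as 1, False as 0
    return {w: hires[w] / seen[w] - overall for w in seen}


def score(words, weights):
    """Sum the learned weights; words never seen in training contribute 0."""
    return sum(weights.get(w, 0.0) for w in words)


weights = learn_word_weights(history)
# Two otherwise identical candidates that differ by a single word:
a = score({"python", "java", "chess"}, weights)
b = score({"python", "java", "chess", "women's"}, weights)
print(weights["women's"], a > b)  # prints -0.5 True
```

Simply deleting the offending word would not be a complete fix: a model can still pick up proxies, such as a college name, that correlate with gender, which is why the mitigation methods that follow matter.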

This brings up an important ethical issue: If AI is trained on biased data, can it really make fair choices? To tackle this, companies are focusing on methods to reduce bias, like:

- Diverse training data: Providing AI with more balanced and representative datasets.

- Explainable AI (XAI): Creating AI models that can explain their decisions, helping companies spot and fix biases.

- Human-AI collaboration: Using AI as a support tool rather than as the main decision-maker in hiring.
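A simple check that supports these methods is to compare selection rates across groups. The sketch below computes each group’s selection rate and the ratio of the lowest rate to the highest. A ratio below 0.8 corresponds to the “four-fifths” rule of thumb used in US employment guidance to flag possible adverse impact. The group labels and counts here are hypothetical, and passing this check alone does not prove a system is fair.

```python
def selection_rates(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Map each group to (number selected) / (number of applicants)."""
    return {group: selected / total for group, (selected, total) in outcomes.items()}


def impact_ratio(outcomes: dict[str, tuple[int, int]]) -> float:
    """Lowest group selection rate divided by the highest group selection rate."""
    rates = selection_rates(outcomes)
    return min(rates.values()) / max(rates.values())


# Hypothetical screening results: group -> (selected, applicants)
screening = {"men": (60, 200), "women": (18, 100)}
ratio = impact_ratio(screening)
verdict = "review needed" if ratio < 0.8 else "no flag"
print(f"impact ratio = {ratio:.2f} ({verdict})")  # prints impact ratio = 0.60 (review needed)
```

Running such a check on every model release, rather than once, helps catch bias that creeps in as data changes.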

However, the core problem persists: Is it possible for AI to be truly neutral in an inherently biased society?

Medical AI: Making Life-or-Death Decisions

AI is increasingly used in hospitals to prioritize patients, manage resources, and even suggest treatments. However, such systems may prioritize younger patients over older ones on the assumption that they have more years of life ahead. Patients with complex medical conditions may also be deprioritized relative to patients with common problems because of historically lower survival rates, which can restrict access to new treatment avenues. Similar bias has been observed in algorithms used for heart failure, cardiac surgery, and vaginal birth after cesarean (VBAC). The VBAC algorithm, for instance, predicted that minority groups were less likely than non-Hispanic white women to have a successful vaginal birth after a C-section. This flawed prediction led to Black patients undergoing more cesarean deliveries than necessary.

These biases show that AI-based decisions should not be purely data-driven; they should also reflect empathy, fairness, and ethics. This does not mean AI should be abandoned. Rather, it should be combined with human oversight to ensure sound decision-making.

How Can We Make AI More Ethical?

As we continue to rely on AI for decision-making, it’s important to address the ethical questions that come with it. Here are some ways AI can be designed to make more ethical decisions:

1. Adding Ethical Guidelines to AI Systems

The first step toward effective ethical guidelines for AI systems is understanding the concerns AI raises while ensuring that it acts in people’s best interest, free from biases related to caste, gender, or race. One way to encourage AI to make moral decisions is to build fundamental values such as transparency and accountability directly into its programming. Such guidelines can also help mitigate environmental risks and promote diversity and inclusion while avoiding bias. For example, a self-driving car could be designed to minimize harm to everyone involved, instead of protecting one group at the expense of another.

2. Human Oversight

One way to ensure the safety, transparency, and fairness of AI systems is to keep humans in the loop. In this setup, AI helps make decisions, but humans have the final say. Because even the most sophisticated systems are prone to errors and biases, meaningful human oversight is important for mitigating legal and ethical risks. For example, AI can suggest whether a patient should undergo a medical procedure, but a doctor can make a better-informed decision by considering not only the patient’s medical history but also their social and emotional needs.
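The human-in-the-loop pattern can be sketched in a few lines. In this hypothetical example, the model only produces a `Recommendation`; a named human reviewer always makes the final call; and the record keeps both the AI’s suggestion and the human’s decision so responsibility can be traced later. The class names, the confidence threshold of 0.9, and the sample case are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Recommendation:
    action: str        # what the model suggests
    confidence: float  # the model's own confidence, in [0, 1]
    rationale: str     # short explanation shown to the human reviewer


@dataclass
class Decision:
    final_action: str
    ai_suggestion: str
    decided_by: str
    flagged_low_confidence: bool


def decide(rec: Recommendation, reviewer: str,
           review: Callable[[Recommendation], str],
           min_confidence: float = 0.9) -> Decision:
    """The AI proposes and a named human decides; both are recorded for accountability."""
    flagged = rec.confidence < min_confidence  # hypothetical threshold for extra scrutiny
    final = review(rec)  # the human may accept or override the suggestion
    return Decision(final, rec.action, reviewer, flagged)


def doctor_review(rec: Recommendation) -> str:
    # The doctor weighs the patient's wider needs and chooses a different plan.
    return "try physiotherapy first"


rec = Recommendation("schedule surgery", 0.72, "similar past cases improved after surgery")
print(decide(rec, "attending physician", doctor_review))
```

Storing the AI’s original suggestion next to the final decision also makes it possible to measure how often humans override the system, which speaks to the concern that people may defer to AI too readily.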

3. Transparency and Explainability

Another approach is to make AI decisions explainable. Algorithms that reveal the rationale behind each decision make it easier to verify that a system behaves as intended. For instance, if an AI system ranks job candidates, it should be able to explain why a particular candidate was chosen. This transparency helps people trust AI decisions and makes it possible to hold someone accountable when those decisions go wrong.
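One straightforward route to explainability is an inherently interpretable model, such as a linear score whose per-feature contributions can be shown to the person reviewing it. The sketch below assumes hypothetical features and weights for a candidate-ranking score; it is one possible design, and more complex models typically need post-hoc explanation tools (for example, SHAP or LIME) instead.

```python
# Hypothetical feature weights for a transparent, linear candidate score.
WEIGHTS = {"years_experience": 0.5, "skills_match": 3.0, "certifications": 1.0}


def score_with_explanation(candidate: dict[str, float]) -> tuple[float, list[tuple[str, float]]]:
    """Return the total score plus each feature's contribution, largest first."""
    contributions = [(feature, weight * candidate.get(feature, 0.0))
                     for feature, weight in WEIGHTS.items()]
    contributions.sort(key=lambda fc: abs(fc[1]), reverse=True)
    total = sum(c for _, c in contributions)
    return total, contributions


total, why = score_with_explanation(
    {"years_experience": 4, "skills_match": 0.8, "certifications": 2})
print(f"score = {total:.1f}")
for feature, contribution in why:
    print(f"  {feature}: {contribution:+.1f}")
```

Because every point of the score traces back to a named feature, a reviewer can spot at a glance if a feature that should be irrelevant is driving the ranking.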

4. Training AI on Diverse and Unbiased Data

AI’s fairness depends on the quality of its training data. If the data is biased or skewed, the model will likely mirror or even amplify those biases. To avoid this, companies should regularly audit their data to weed out discriminatory patterns and seek input not only from engineers but also from ethicists, sociologists, and legal experts. They should also use varied data that reflects the real population.
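A data audit can start with something as simple as comparing each group’s share of the training set to its share of the population the system will serve. In the sketch below, the group labels, the reference population shares, and the 5-percentage-point tolerance are all hypothetical; choosing the right reference population is itself a judgment call that benefits from the non-engineering experts mentioned above.

```python
from collections import Counter


def representation_gaps(samples: list[str],
                        population_share: dict[str, float]) -> dict[str, float]:
    """Dataset share minus population share per group (negative = under-represented)."""
    counts = Counter(samples)  # groups absent from the data count as 0
    n = len(samples)
    return {group: counts[group] / n - share for group, share in population_share.items()}


# Hypothetical group label of each training example, and reference population shares.
training_groups = ["A"] * 70 + ["B"] * 20 + ["C"] * 10
population = {"A": 0.5, "B": 0.3, "C": 0.2}

for group, gap in representation_gaps(training_groups, population).items():
    note = "  <- under-represented" if gap < -0.05 else ""
    print(f"{group}: {gap:+.2f}{note}")
```

Balanced representation is necessary but not sufficient: a perfectly proportional dataset can still encode biased labels, such as past hiring decisions.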


The Responsibility Dilemma: Who’s Accountable for AI’s Mistakes?

When AI makes a mistake, who should be held responsible? Is it:

- The developers who designed the algorithm?

- The company that deployed it?

- The machine itself?

The Legal and Ethical Gray Area

AI does not have intentions the way humans do; it simply follows its programming. So when an AI-driven system makes a harmful decision, determining liability becomes a legal and ethical challenge.

For example:

- If a self-driving car gets into an accident, should the car maker face lawsuits, or should the AI in the car be blamed?

- If an AI loan approval system wrongfully rejects loans for minority applicants, should the company be held legally responsible?

Current laws are struggling to keep up with these challenges, making AI regulation a critical issue for the future.

Can AI Ever Truly Be Ethical?

Many researchers believe AI can be designed to follow ethical principles, but which ethical framework should it use?

1. Utilitarian Ethics (The Greatest Good for the Most People)

Utilitarianism is an ethical theory holding that the morally right action is the one that produces the most good. AI could be programmed to maximize overall benefit, even if that means making hard choices. For example, a self-driving car in an unavoidable accident might be able to save either five pedestrians or one passenger. A purely utilitarian approach might sacrifice the one passenger to save the five pedestrians, simply because five lives outnumber one. However, this approach raises serious concerns. What should be the basis for deciding the ‘greater good’? Is something acceptable just because it benefits the majority? Would humans be comfortable with machines weighing their lives against statistical calculations?

2. Deontological Ethics (Follow the Rules, No Exceptions)

In contrast to utilitarian ethics, this approach would make AI follow strict rules regardless of the consequences, without weighing outcomes. For example, a recruitment AI could be programmed never to discriminate based on gender or race, even if past data suggests that one gender fits a role better. However, this creates a rigid, one-size-fits-all policy that may not work for complex real-life problems. How should the AI handle conflicting rules? Should a hospital AI protect patient confidentiality or report a serious concern to the authorities? While this approach ensures consistency, real-life morality requires flexibility, which is something AI often struggles with.

3. Human-Centric AI (Keeping Humans in the Loop)

Human-Centric AI is a more balanced approach in which AI and humans make decisions together, rather than either reaching conclusions alone. Some experts argue that AI should never make final moral decisions. Instead, it should offer suggestions and be transparent about what it recommends and how it reached those recommendations, while humans retain the final say. For example, rather than deciding who receives treatment in a hospital, an AI can provide data-driven insights that help doctors reach a sound moral judgment. This ensures that ethical responsibility remains with people, not machines. However, this approach raises its own questions. In time-critical situations that demand immediate action, will human oversight slow decisions down? And what if AI recommendations influence humans too much?

The Future of AI Ethics: Striking a Balance

As AI continues to evolve, we must strike a balance between innovation and ethical responsibility. The challenge lies not only in teaching AI to recognize ethical dilemmas but also in teaching it to account for deeper human qualities like compassion and empathy. No single framework, whether utilitarian, deontological, or human-centric, can fully govern AI ethics; each has its own limitations. The best way to safeguard ethical practice is a hybrid model that combines:

- Utilitarian insights to maximize the benefits and minimize the harm.

- Deontological safeguards to ensure consistency and emphasize moral duties, rights, and principles that must be upheld.

- Human oversight to keep ethical responsibility where it belongs: with us.
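The three layers of this hybrid model can be sketched as a simple decision pipeline: hard rules first remove impermissible options (deontological safeguards), the remaining options are ranked by expected benefit (utilitarian insight), and a human must approve before anything is acted on (human oversight). Everything here, including the rule names, the benefit scores, and the `hybrid_decide` function, is a hypothetical illustration; estimating “benefit” in real settings is the hard and contested part.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Option:
    name: str
    expected_benefit: float                          # utilitarian estimate (hypothetical units)
    violates: set[str] = field(default_factory=set)  # rules this option would break


# Deontological safeguards: hard rules that no amount of benefit can override.
HARD_RULES = {"discriminates_by_protected_trait", "breaches_consent"}


def hybrid_decide(options: list[Option],
                  human_approves: Callable[[Option], bool]) -> Optional[Option]:
    # 1. Deontological filter: drop any option that breaks a hard rule.
    permitted = [o for o in options if not (o.violates & HARD_RULES)]
    # 2. Utilitarian ranking: try permitted options from most to least beneficial.
    for option in sorted(permitted, key=lambda o: o.expected_benefit, reverse=True):
        # 3. Human oversight: a person must approve before the option is acted on.
        if human_approves(option):
            return option
    return None  # nothing acceptable: escalate rather than act automatically


options = [
    Option("plan A", 9.0, {"breaches_consent"}),
    Option("plan B", 7.5),
    Option("plan C", 6.0),
]
chosen = hybrid_decide(options, human_approves=lambda o: o.name != "plan B")
print(chosen.name if chosen else "escalate to a human committee")  # prints plan C
```

Here plan A is never offered despite its high benefit score, and the reviewer’s rejection of plan B shifts the outcome to plan C, so each layer visibly constrains the others.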

Artificial intelligence is not capable of experiencing human emotions or possessing a personal moral compass; nevertheless, it can be designed to follow ethical guidelines, contingent upon humans taking responsibility for its development and oversight.

Conclusion: Should We Trust AI with Moral Choices?

While AI’s potential is vast, these ethical dilemmas cannot be ignored. Because AI cannot understand human emotions or bear moral responsibility, its role in decision-making should be limited, and the final responsibility for well-considered decisions should always rest with humans.

So, can we fully trust AI to make moral choices? Probably not. But by implementing robust regulations, refining training data, and ensuring continuous human intervention, we can build AI that serves as a powerful aid rather than an unchecked decision-maker.

What’s your take on AI’s ethical dilemma? Should we trust machines with moral decisions, or should humans always have the final say?

Ready to build your tech dream team?

Check out MyNextDeveloper, a platform where you can find the top 3% of software engineers who are deeply passionate about innovation. Our on-demand, dedicated, and thorough software talent solutions are available to offer you a complete solution for all your software requirements.

Visit our website to explore how we can assist you in assembling your perfect team.