How to Evaluate LLM Models: Metrics, Benchmarks & What They Really Mean

How to Evaluate LLM Models: Metrics, Benchmarks & What They Really Mean

The AI wars are on. But if every model claims to be the best — how do you actually know which one wins in the real world?

But yet another LLM (Large Language Model) grabs the week’s headlines. GPT-4o releases a live demo that becomes viral on the internet. Google comes back with Gemini 2.5 Pro, boasting multimodal dominance. Meta, Anthropic, Mistral — each one of them jumps into the action.

But that is the trap: Most judge such models by flashy numbers or leaderboard ranking. And that’s a trap.

Because of actual performance? It is contextual. It is nuanced. It is not a one-number problem — it is use case, cost, ethics, consistency, and trust.

Let’s dissect just how precisely to actually assess LLMs in 2025 — beyond the hype.

1. Benchmarks: The Tests Everyone Cites (But Few Take)

Picture benchmarks as check-ups. Useful-but finite.

These are the top suspects:

- MMLU (Massive Multitask Language Understanding): Tests knowledge across 57 academic subjects — like history, math, law, and medicine. It’s like an AI SAT on steroids.

- ARC (AI2 Reasoning Challenge): Focuses on grade-school science questions to test logical and commonsense reasoning. Think of it as testing if the model can think through basic cause-and-effect.

- HellaSwag: Evaluates how well a model can pick the most plausible continuation of a story or situation from multiple choices. It’s about understanding everyday scenarios.

- Winogrande: A challenging commonsense reasoning task based on fill-in-the-blank sentences that require contextual understanding.

That’s the surprise:

- These are specific tests.

- They do not have replicating conversation flow, factuality, or the way your real users talk.

- Standards are the beginning and not the finish.

Want to try them yourself?

You can explore these benchmark datasets on Papers with Code to test LLMs interactively.

2. Most Important Metrics That Really Matter in Use

Here’s what leading engineers and product teams consider:

- Accuracy: Does it get things right without making things up

- Latency: Is it fast enough for production?

- Context window: Is it able to remember what you said to it two pages back?

- Token efficiency: Does it beat around the bush or get to the point?

- Factual consistency: Can it be trusted with your brand?

They decide whether your LLM is a liability or a superpower.

3. Get rid of Demos. Test It As If It’s In Production.

The real MVP test? Integrate it into your procedure:

- Coders: Make it your assistant in writing, debugging, and describing bugs in your real repo.

- Support teams: Decide if it can provide a polite response to an angry customer DM.

- Teachers: Decide if it can simplify concepts and identify if students are confused.

This is not brute force. It’s more about suitability.

4. Can It Follow Instructions and Remain Ethical?

Alignment is another aspect. Because a powerful model which cannot follow safe directions is just a ticking bomb.

Evaluate:

- Is it able to resist tricking with prompts?

- Is it handling the matter of sensitivities in a good way?

- Does it make sure that it will not be the generator of spam and of low-quality content?

Even the most proficient models are liable to errors. Don’t indulge in the use of advertising.

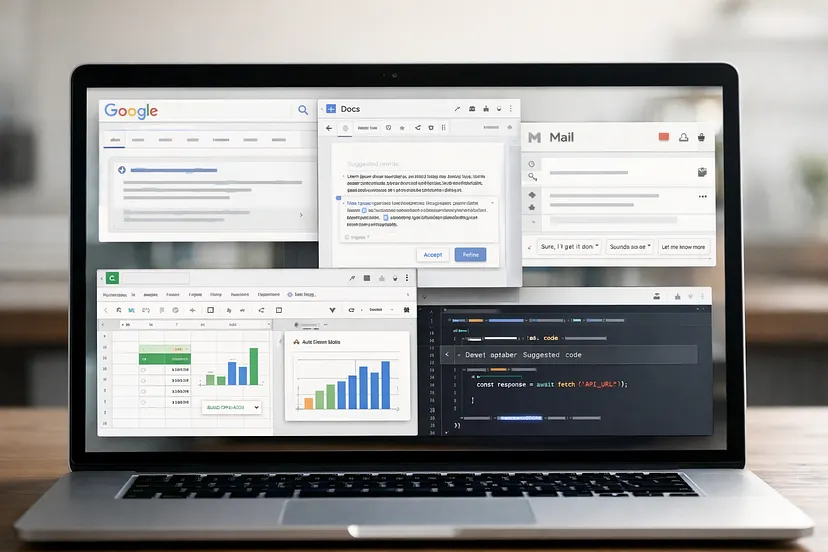

5. Multimodal Powers: Text Is Just the Beginning

In 2025, the biggest LLMs not only open their eyes but also ears, and soon they will also touch. GPT-4o and Gemini 2.5 Pro, for instance, launch their missions — multimodal ones.

For instance:

- Images, charts, photos — do they really get it?

- Testing the sound input — do they record the intonation or recognize different speakers?

- Requesting image + text production — can they find the similarities and differences?

Definitely, the future is not only smart — it’s the one with a strong sensory system

6. Cost vs Performance: The Unspoken Dealbreaker

Everyone wants the best model until the time of settling the payment arrives.

Main trade-offs:

- Examples of open source, such as Phi-3 or Mistral = more affordable, more personalized.

- Premium like GPT-4o or Gemini = best performance, safety layers.

Be careful for:

- Price for 1M tokens

- Hosting/inference infrastructure

- Tuning or customization needs

Your decision depends on whether you’re building an enterprise SaaS or a startup MVP.

7. Conclusion: Pick the Model That Fits You

There is no such “best” LLM. There’s only the top model for your situation, your finances, and your objectives.

So the next time you’re asked, “What is the best AI?” — you’ll know to ask a better question:

Best for what?

In a world where every LLM screams for attention, the smartest players will be the ones who stop chasing the hype-and start testing for fit.

Looking to build a high-performing remote tech team?

Check out MyNextDeveloper, a platform where you can find the top 3% of software engineers who are deeply passionate about innovation. Our on-demand, dedicated, and thorough software talent solutions are available to offer you a complete solution for all your software requirements.

Visit our website to explore how we can assist you in assembling your perfect team.